Undergraduate Research in the IVILAB

The Interdisciplinary Visual Intelligence group has an active program for involving undergraduates in research. We strongly encourage capable and interested undergraduate students to become involved with research early on. Doing so is becoming increasingly critical for preparing for grad school and has also helped many students get jobs in industry.

Link to current opportunities and the application process.

Due to overwhelming interest in the IVILAB we need to limit the number undergraduate researchers. Unfortunately, we have to turn away many undergraduates looking for research experience.

Publishing (needs updating).

Undergraduate researchers working with IVI lab have a great record of contributing sufficiently to research projects that they become authors on papers. So far, twelve undergraduates have been authors on nineteen vision lab papers and three abstracts (needs updating). Click here for the list.

IVILAB undergraduate researchers past and present (needs updating).

Students who have participated in the IVILAB as undergraduates include Matthew Johnson (honor's student, graduated December 2003), Abin Shahab (honor's student, graduated May 2004), Ekaterina (Kate) Taralova (now at CMU), Juhanni Torkkola (now at Microsoft), Andrew Winslow (now at Tufts), Daniel Mathis, Mike Thompson, Sam Martin, Johnson Truong (now at SMU), Andrew Emmott (headed to Oregon State), Ken Wright, Steve Zhou, Phillip Lee, James Magahern, Emily Hartley, Steven Gregory, Bonnie Kermgard, Gabriel Wilson, Alexander Danehy, Daniel Fried, Joshua Bowdish, Lui Lui, Ben Dicken, Haziel Zuniga, Mark fischer, Matthew Burns, Racheal Gladysz, Salika Dunatunga (honors, now at U. Penn), Kristle Schulz (honors), and Soumya Srivastava.

Examples of undergraduate research in the IVILAB (needs updating)

Understanding Scene Geometry

The image(*) to the right shows undergraduate Emily Hartley determining the

geometry of an indoor scene and the parameters of the camera that took the

picture of the scene. Such data is

critical for both training and validating systems that automatically infer scene

geometry, the camera parameters, the objects within the scene, and their

location and pose.

The image(*) to the right shows undergraduate Emily Hartley determining the

geometry of an indoor scene and the parameters of the camera that took the

picture of the scene. Such data is

critical for both training and validating systems that automatically infer scene

geometry, the camera parameters, the objects within the scene, and their

location and pose.

![]() Funding for undergraduates provided by REU supplement to NSF

CAREER

grant IIS-0747511.

Funding for undergraduates provided by REU supplement to NSF

CAREER

grant IIS-0747511.

(*) Photo credit Robert Walker Photography.

Semantically Linked Instructional Content (SLIC)

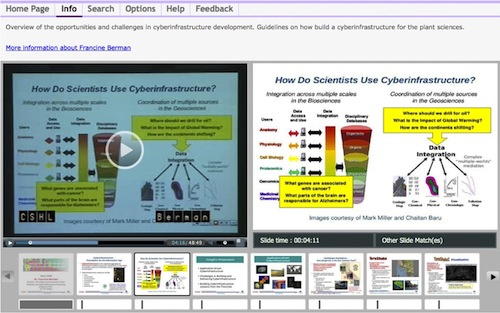

The image shows a screen shot of the SLIC educational video browsing system which

is an excellent project for undergraduates interested in multimedia and web design.

For more information see the

SLIC project page,

or contact

Yekaterina (Kate) Kharitoova

(ykk AT email DOT arizona DOT edu) for more

information. SLIC has led to several publications with undergraduate authors,

and currently several undergraduate students are working on it.

The image shows a screen shot of the SLIC educational video browsing system which

is an excellent project for undergraduates interested in multimedia and web design.

For more information see the

SLIC project page,

or contact

Yekaterina (Kate) Kharitoova

(ykk AT email DOT arizona DOT edu) for more

information. SLIC has led to several publications with undergraduate authors,

and currently several undergraduate students are working on it.

![]() SLIC is partly supported by NSF grant EF-0735191.

SLIC is partly supported by NSF grant EF-0735191.

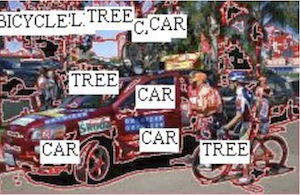

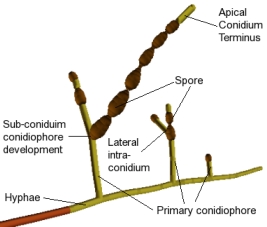

Aligning image caption words with image elements

There are now millions of images on-line with associated text (e.g., captions). Information in captions is either redundant (e.g., the word dog occurs, and the dog is obvious) or complementary (e.g., there is sky above the dog, but it is not mentioned). Redundant information allows us to train machine learning methods to predict one of these modalities from the other. Alternatively, complementary information in the modalities can disambiguate uncertainty (see "Word Sense Disambiguation with Pictures" below), or provide for combined visual and textual searching and data mining. Under the guidance of PHD student Luca del Pero, undergraduates Phil Lee, James Magahern, and Emily Hartley have contributed to research on using object detectors to improve the alignment of natural language captions to image data, which has already led to a publication for them. For more information on this project, contact Luca del Pero (delpero AT cs DOT arizona DOT edu).

![]()

![]() Funding for undergraduates provided by

ONR and

REU supplement to NSF

CAREER

grant IIS-0747511.

Funding for undergraduates provided by

ONR and

REU supplement to NSF

CAREER

grant IIS-0747511.

On the left is the baseline result; the image on the right shows the result with detectors.

On the left is the baseline result; the image on the right shows the result with detectors.

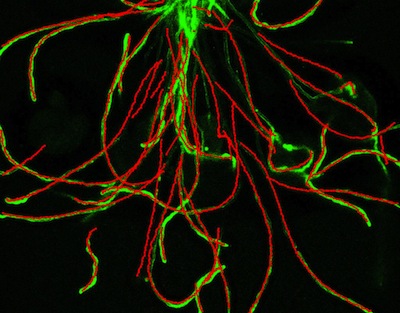

Simultaneously tracking many objects with applications to biological growth

Simultaneously tracking many objects with overlapping trajectories is hard

because you do not know which detections belong to which objects. The vision lab

has developed a new approach to this problem and has applied it to several kinds

of data. For example, the image to the right shows tubes that are growing out of

pollen specs (not visible) towards ovules (out of the picture) in an

Simultaneously tracking many objects with overlapping trajectories is hard

because you do not know which detections belong to which objects. The vision lab

has developed a new approach to this problem and has applied it to several kinds

of data. For example, the image to the right shows tubes that are growing out of

pollen specs (not visible) towards ovules (out of the picture) in an

This project is in collaboration with the Palanivelu lab.

![]() Funding for this project provided by NSF grant IOS-0723421.

Funding for this project provided by NSF grant IOS-0723421.

Identifying machine parts using CAD models

CAD models provide the 3D structure of many man made objects such as machine

parts. This projects aims to find objects in images based on these models.

However, since the data is most readily available as triangular meshes, 3D

features that are useful for matching 2D images must be extracted from mesh

data. Undergraduate Emily Hartley has contributed software for this task, and

undergraduate Andrew Emmott has contributed software for matching extracted 3D

features to 2D images. They have been mentored by PHD student

Luca del Pero.

For more information, contact

him (delpero AT

cs DOT arizona DOT edu).

CAD models provide the 3D structure of many man made objects such as machine

parts. This projects aims to find objects in images based on these models.

However, since the data is most readily available as triangular meshes, 3D

features that are useful for matching 2D images must be extracted from mesh

data. Undergraduate Emily Hartley has contributed software for this task, and

undergraduate Andrew Emmott has contributed software for matching extracted 3D

features to 2D images. They have been mentored by PHD student

Luca del Pero.

For more information, contact

him (delpero AT

cs DOT arizona DOT edu).

![]() Funding for undergraduates provided by

NSF Grant 0758596 and

REU supplement to NSF

CAREER

grant IIS-0747511.

Funding for undergraduates provided by

NSF Grant 0758596 and

REU supplement to NSF

CAREER

grant IIS-0747511.

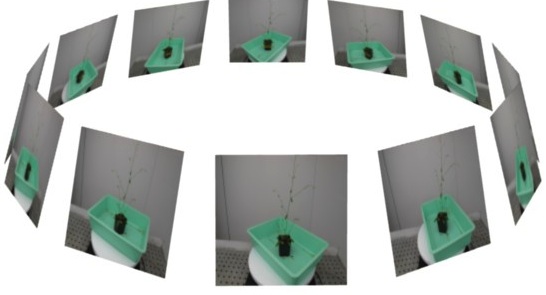

Inferring Plant Structure from Images

Quantifying plant geometry is critical for understanding how subtle details in

form are caused by molecular and environmental changes. Developing automated

methods for determining plant structure from images is motivated by the

difficulty of extracting these details by human inspection, together with the

need for high throughput experiments where we can test against a large number of

variables.

Quantifying plant geometry is critical for understanding how subtle details in

form are caused by molecular and environmental changes. Developing automated

methods for determining plant structure from images is motivated by the

difficulty of extracting these details by human inspection, together with the

need for high throughput experiments where we can test against a large number of

variables.

To get numbers for structure we fit geometric

models of plants to image data. The picture on the right shows multiple views of

an Arabidopsis plant (top), two views (bottom, left), and fits of the skeleton

to the image data, projected using camera models corresponding to those two

views. Undergraduates Sam Martin and Emily Hartley have helped collect the

image data, arrange feature extraction, and create ground truth data fits to it

for training and evaluation. This project is led by

Kyle Simek.

For more information, contact him

(ksimek AT cs DOT arizona DOT edu).

This project is in collaboration with the Palanivelu and Wing labs.

![]() Funding for undergraduates provided by

the NSF funded

iPlant

project, via

the University of Arizona UBRP program

and

an REU supplement to NSF

CAREER

grant IIS-0747511.

Funding for undergraduates provided by

the NSF funded

iPlant

project, via

the University of Arizona UBRP program

and

an REU supplement to NSF

CAREER

grant IIS-0747511.

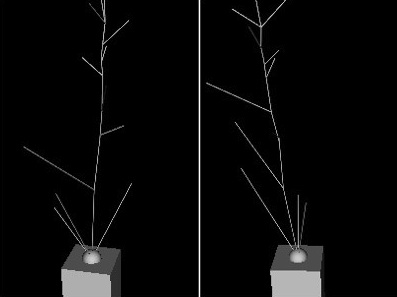

Modeling and visualizing Alternaria

To the right is a labeled model of the fungus Alternaria generated by a

stochastic L-system built by undergraduate researcher

Kate Taralova.

For more information, follow this

link.

To the right is a labeled model of the fungus Alternaria generated by a

stochastic L-system built by undergraduate researcher

Kate Taralova.

For more information, follow this

link.

This project is in collaboration with the Pryor lab.

![]() Support for undergraduates provided by TRIFF and a REU supplement to a

department of computer science NSF research infrastructure grant

Support for undergraduates provided by TRIFF and a REU supplement to a

department of computer science NSF research infrastructure grant

Inactive and Subsumed Projects

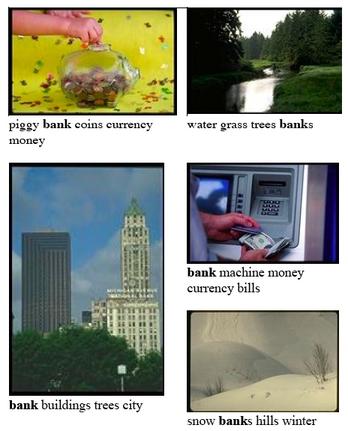

Word sense disambiguation with pictures

Many words in natural language are ambiguous as illustrated here by the word

"bank". Typically, resolving such ambiguity is attempted by looking at nearby

words in the passage. Computer vision lab undergraduate researcher Matthew Johnson played a key role in

the development of a novel method for adding information from accompanying

illustrations to help reduce the ambiguity. The system learns from a data base

of images that certain word senses (e.g., meanings of bank found with outdoor

photos), are associated with certain kinds of image features. This association

is then used to incorporate information in illustrations to help disambiguate

the word under consideration. This work led to two publications for Matthew.

Many words in natural language are ambiguous as illustrated here by the word

"bank". Typically, resolving such ambiguity is attempted by looking at nearby

words in the passage. Computer vision lab undergraduate researcher Matthew Johnson played a key role in

the development of a novel method for adding information from accompanying

illustrations to help reduce the ambiguity. The system learns from a data base

of images that certain word senses (e.g., meanings of bank found with outdoor

photos), are associated with certain kinds of image features. This association

is then used to incorporate information in illustrations to help disambiguate

the word under consideration. This work led to two publications for Matthew.

![]() Support for undergraduates provided by

TRIFF and a

REU supplement to a department of computer science NSF research infrastructure

grant.

Support for undergraduates provided by

TRIFF and a

REU supplement to a department of computer science NSF research infrastructure

grant.

Vision system for flying robots

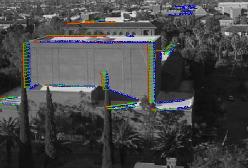

These three images illustrate work by computer science students on

a UA multi-department effort to compete in international aerial robotics

competition which is largely an event for undergraduates. Here computer

controlled planes and/or helicopters work towards accomplishing a mission

specified by the contest organizers. Part of the current task is to find a

building having a particular symbol on it (left), and identify the doors and

windows of that building, and then identify which doors and windows are open so

that a sub-vehicle can be launched through the portal. The middle figure shows

the symbol identification software being tested from a moving vehicle to

simulate flight. The far right figure shows a view from the computer science

department with lines found in this image and the matching lines found in a

companion image. The students use the shift (shown in green) between matching

edges to estimate the distance to the edge, which is used to help analyze the

structures. Images provided by undergraduate researcher

Ekaterina (Kate) Taralova (now at CMU).

These three images illustrate work by computer science students on

a UA multi-department effort to compete in international aerial robotics

competition which is largely an event for undergraduates. Here computer

controlled planes and/or helicopters work towards accomplishing a mission

specified by the contest organizers. Part of the current task is to find a

building having a particular symbol on it (left), and identify the doors and

windows of that building, and then identify which doors and windows are open so

that a sub-vehicle can be launched through the portal. The middle figure shows

the symbol identification software being tested from a moving vehicle to

simulate flight. The far right figure shows a view from the computer science

department with lines found in this image and the matching lines found in a

companion image. The students use the shift (shown in green) between matching

edges to estimate the distance to the edge, which is used to help analyze the

structures. Images provided by undergraduate researcher

Ekaterina (Kate) Taralova (now at CMU).

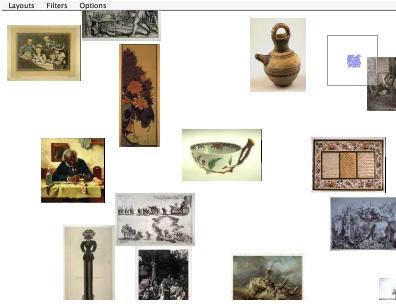

Browsing large image collections

A screen shot of a program for browsing large digital art image databases that

is being developed by undergraduate students in computer science at the U of A.

(Art images courtesy of the Fine Arts Museum of San Francisco).

Contributions to this project have been made by undergraduates

Matthew Johnson and John Bruce.

A screen shot of a program for browsing large digital art image databases that

is being developed by undergraduate students in computer science at the U of A.

(Art images courtesy of the Fine Arts Museum of San Francisco).

Contributions to this project have been made by undergraduates

Matthew Johnson and John Bruce.

Evaluation of image segmentation algorithms

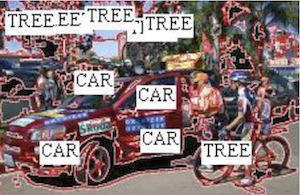

Two images which have been segmented by three different methods. U of A

undergraduate students in computer science are involved in research to evaluate

the quality of such methods. Segmentation quality is quantified by the degree to

which the regions are useful to programs which automatically recognize what is

in the images. Contributions have been made by Abin Shahab.

Two images which have been segmented by three different methods. U of A

undergraduate students in computer science are involved in research to evaluate

the quality of such methods. Segmentation quality is quantified by the degree to

which the regions are useful to programs which automatically recognize what is

in the images. Contributions have been made by Abin Shahab.